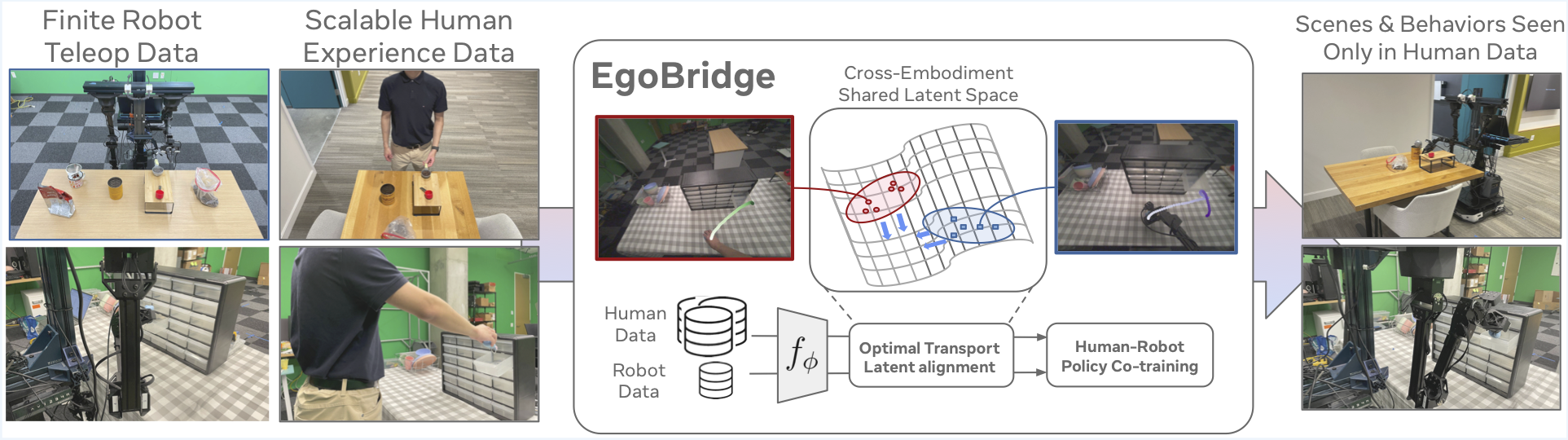

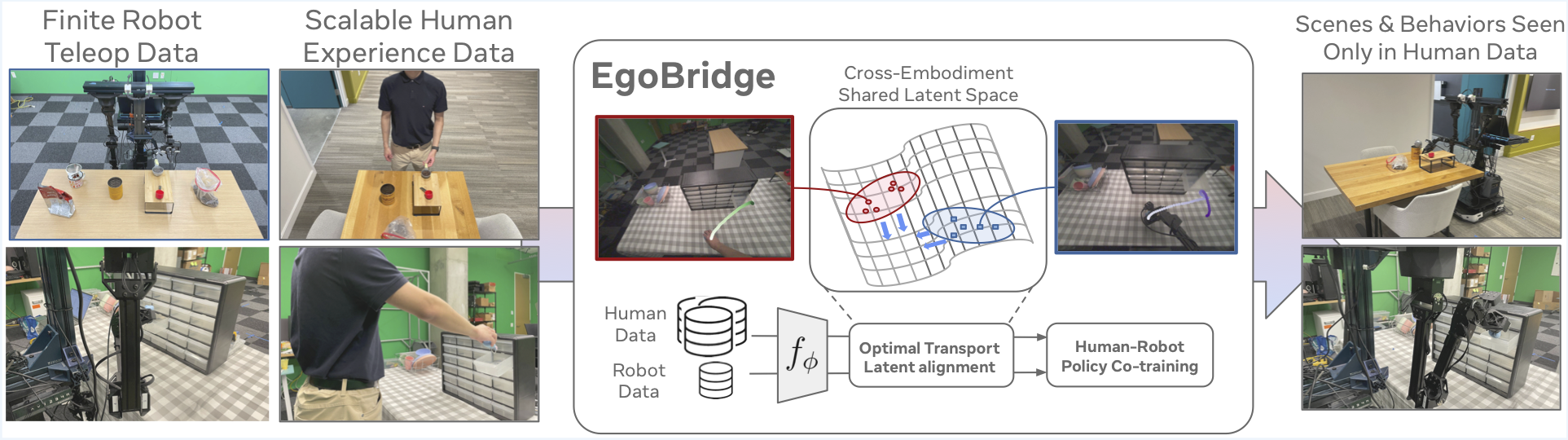

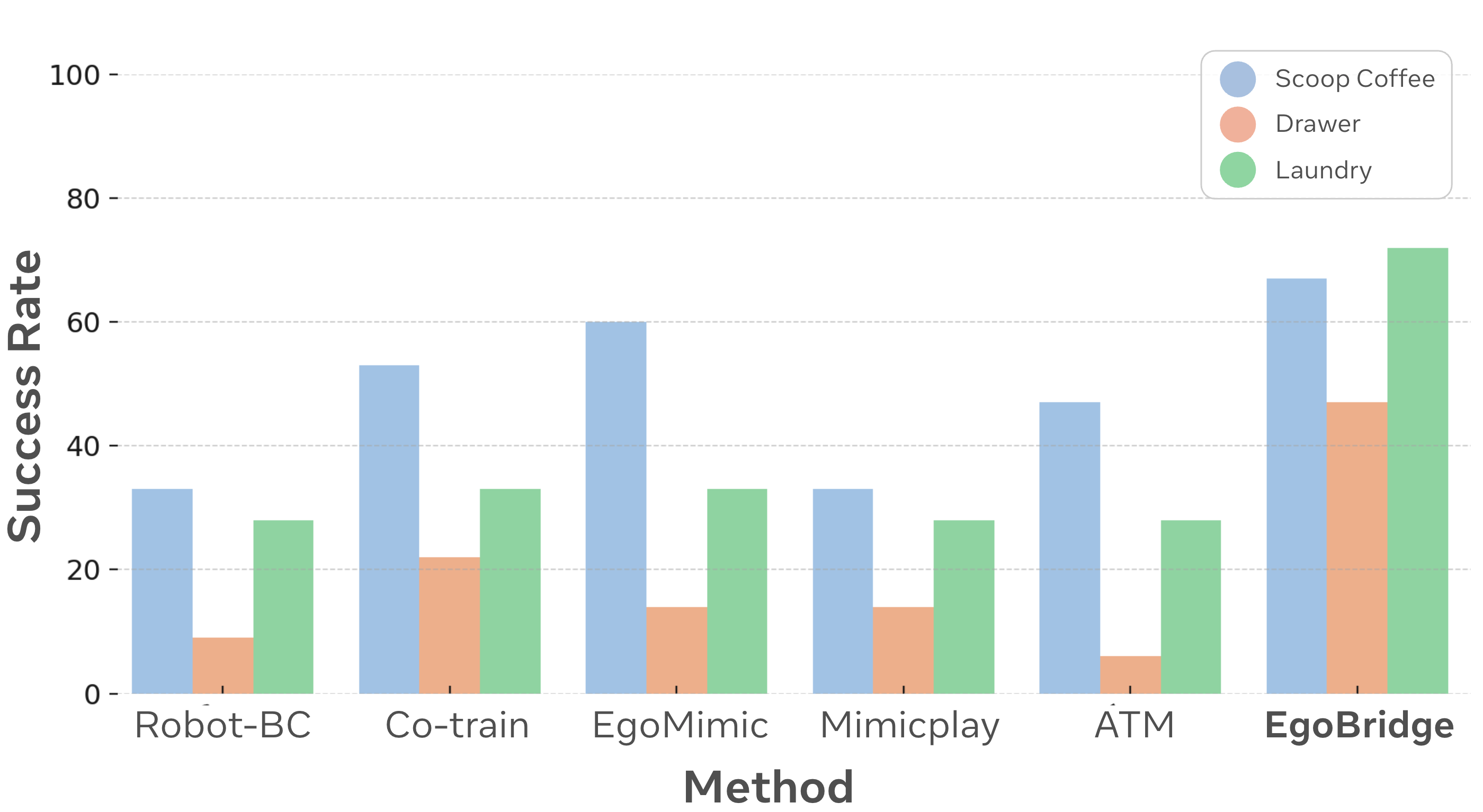

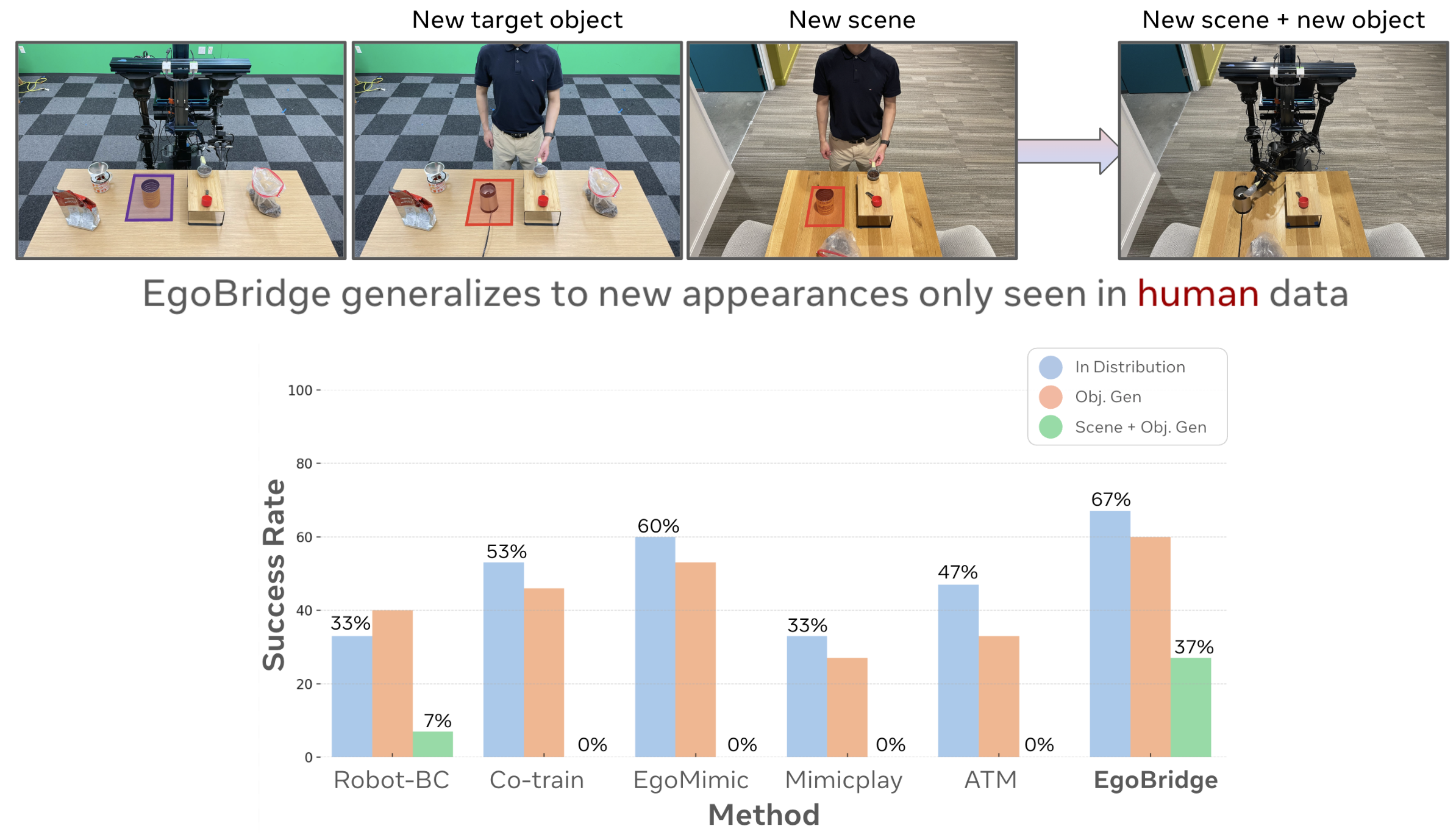

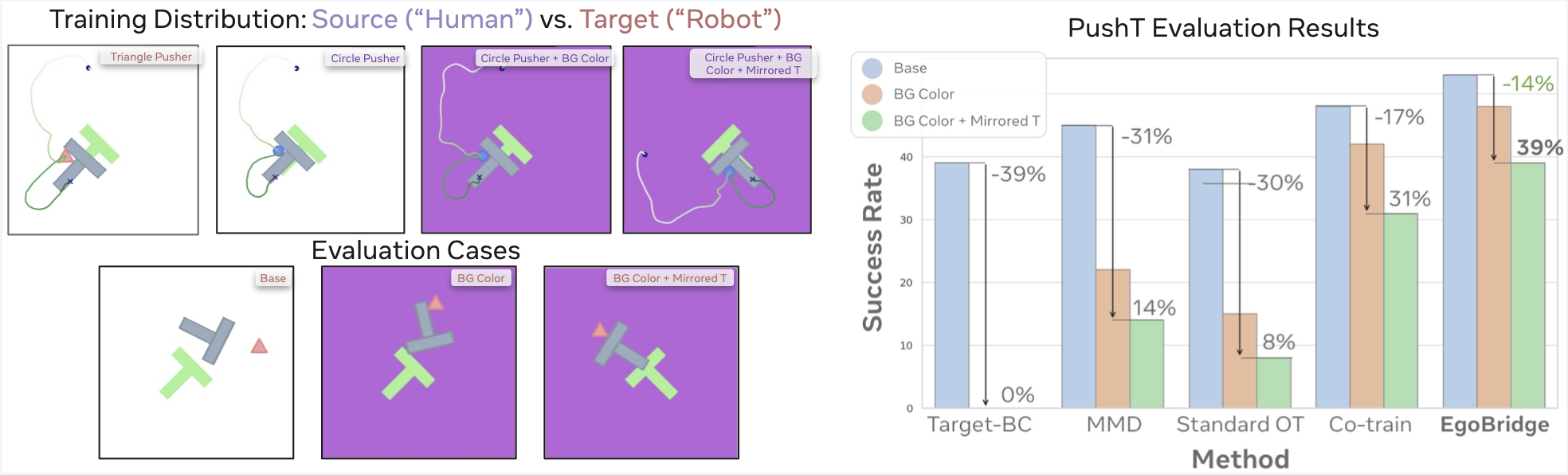

Egocentric human experience data is a vast resource for scaling up end-to-end imitation learning for robotic manipulation. However, significant domain gaps between humans and robots in visual appearance, sensor modalities, and kinematics impede knowledge transfer. This paper presents EgoBridge, a unified co-training framework that uses domain adaptation to explicitly align the policy latent spaces of human and robot data. By minimizing an Optimal Transport (OT)-based discrepancy over the joint distribution of policy latent features and actions, we learn observation representations that not only align across the human and robot domains but also preserve the action-relevant information critical for policy learning. EgoBridge improves absolute policy success rate by 44% over human-augmented cross-embodiment baselines on three real-world single-arm and bimanual manipulation tasks. EgoBridge also generalizes to new objects, scenes, and tasks seen only in human data, where baselines fail entirely.
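
The abstract's core idea is aligning human and robot samples through an OT discrepancy over joint (latent feature, action) pairs. A small entropic OT (Sinkhorn) sketch can illustrate this. It is not EgoBridge's implementation. The uniform marginals, the squared-Euclidean latent and action distances, the hypothetical `action_weight` and `epsilon` parameters, and all helper names are assumptions made for illustration.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def sq_dist(x: Vector, y: Vector) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(x, y))


def joint_cost_matrix(human_z: List[Vector], human_a: List[Vector],
                      robot_z: List[Vector], robot_a: List[Vector],
                      action_weight: float = 1.0) -> List[List[float]]:
    """Assumed joint cost: C[i][j] = ||z_h - z_r||^2 + action_weight * ||a_h - a_r||^2."""
    return [[sq_dist(zh, zr) + action_weight * sq_dist(ah, ar)
             for zr, ar in zip(robot_z, robot_a)]
            for zh, ah in zip(human_z, human_a)]


def logsumexp(xs: List[float]) -> float:
    """Numerically stable log(sum(exp(x)))."""
    m = max(xs)
    return m + math.log(sum(math.exp(x - m) for x in xs))


def sinkhorn(cost: List[List[float]], epsilon: float = 0.1,
             iters: int = 200) -> Tuple[List[List[float]], float]:
    """Log-domain entropic OT with uniform marginals; returns (plan, transport cost)."""
    n, m = len(cost), len(cost[0])
    log_mu, log_nu = math.log(1.0 / n), math.log(1.0 / m)
    f, g = [0.0] * n, [0.0] * m  # dual potentials for human / robot samples
    for _ in range(iters):
        # Update f so each row of the plan sums to 1/n, then g so each column sums to 1/m.
        f = [epsilon * (log_mu - logsumexp([(g[j] - cost[i][j]) / epsilon for j in range(m)]))
             for i in range(n)]
        g = [epsilon * (log_nu - logsumexp([(f[i] - cost[i][j]) / epsilon for i in range(n)]))
             for j in range(m)]
    plan = [[math.exp((f[i] + g[j] - cost[i][j]) / epsilon) for j in range(m)]
            for i in range(n)]
    total = sum(plan[i][j] * cost[i][j] for i in range(n) for j in range(m))
    return plan, total


# Toy usage: each human sample's closest robot counterpart appears in swapped order.
human_z, human_a = [[0.0, 0.0], [1.0, 1.0]], [[0.0], [1.0]]
robot_z, robot_a = [[1.1, 0.9], [0.1, 0.0]], [[1.0], [0.0]]
plan, ot_cost = sinkhorn(joint_cost_matrix(human_z, human_a, robot_z, robot_a))
print(plan, ot_cost)  # mass concentrates on (human 0, robot 1) and (human 1, robot 0)
```

The action term enters the same cost as the latent term. As a result, two samples are cheap to match only when both their representations and their actions agree. This is the intuition behind aligning the joint distribution rather than the latent features alone.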

We presented EgoBridge, a novel co-training framework that enables robots to learn effectively from egocentric human data by explicitly addressing the domain gaps between humans and robots. EgoBridge applies Optimal Transport to the joint distribution of policy latent features and actions, with the transport cost guided by Dynamic Time Warping (DTW) over action trajectories. In this way it aligns human and robot representations while preserving critical action-relevant information. Our experiments demonstrated significant improvements in real-world task success rates (up to a 44% absolute gain). Importantly, they also showed robust generalization to novel objects, scenes, and even tasks observed only in human demonstrations, where baselines often failed.
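
The conclusion mentions Dynamic Time Warping (DTW) on action trajectories. A sketch of classic DTW follows. It assumes each trajectory is a list of equal-dimension action vectors and uses Euclidean per-step distance. The step metric, the absence of any length normalization, and the helper names `dtw_distance` and `dtw_cost_matrix` are hypothetical and not taken from EgoBridge.

```python
import math
from typing import List, Sequence

Trajectory = Sequence[Sequence[float]]  # T timesteps x action_dim


def dtw_distance(x: Trajectory, y: Trajectory) -> float:
    """Classic DTW: minimum summed Euclidean step cost over monotone alignments."""
    n, m = len(x), len(y)
    inf = float("inf")
    # D[i][j] = cost of the best alignment of x[:i] with y[:j].
    D = [[inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = math.dist(x[i - 1], y[j - 1])
            # Advance in x, advance in y, or advance in both.
            D[i][j] = step + min(D[i - 1][j], D[i][j - 1], D[i - 1][j - 1])
    return D[n][m]


def dtw_cost_matrix(human: List[Trajectory], robot: List[Trajectory]) -> List[List[float]]:
    """Pairwise DTW costs, e.g. as an action term in an OT cost matrix (assumption)."""
    return [[dtw_distance(h, r) for r in robot] for h in human]


# Toy usage: the same 1-D reach executed at two speeds, plus a reversed motion.
reach = [[0.0], [1.0], [2.0], [3.0]]
slow_reach = [[0.0], [0.0], [1.0], [2.0], [3.0]]
reverse = reach[::-1]
costs = dtw_cost_matrix([reach], [slow_reach, reverse])
print(costs)  # first entry is 0.0
```

DTW tolerates differences in execution speed. Two demonstrations of the same motion performed at different speeds can have zero DTW distance even though their lengths differ, whereas a per-timestep comparison could not even pair them up directly.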

@misc{punamiya2025egobridgedomainadaptationgeneralizable,
  title         = {EgoBridge: Domain Adaptation for Generalizable Imitation from Egocentric Human Data},
  author        = {Ryan Punamiya and Dhruv Patel and Patcharapong Aphiwetsa and Pranav Kuppili and Lawrence Y. Zhu and Simar Kareer and Judy Hoffman and Danfei Xu},
  year          = {2025},
  eprint        = {2509.19626},
  archivePrefix = {arXiv},
  primaryClass  = {cs.RO},
  url           = {https://arxiv.org/abs/2509.19626}
}